Rough Notes From RSA 2018 Conference Sessions

My notes from RSA 2018 sessions and labs. I've sanded the rough edges off my raw notes, but they might still be a bit 'bumpy'.

Day 1: DevSecOps day

- 80% to 90% of your 'in house' code is OSS modules your devs have assembled

- 12% of OSS modules have known vulnerabilities that a lot of companies don't know about (because they aren't looking)

- Do you have an Open Source licensing/usage policy that guides or governs the use of OSS?

- Security is everybody's problem, which makes it hard for anyone to accept responsibility.

- My thoughts on this: How do we incentivize secure behavior? Policies and procedures should be designed with the users/people in mind. Management should work to provide the right incentives to help people achieve the goals of security.

Day 2

How to avoid getting slaughtered by vulnerability management

- Vulnerability management is purely an Operations exercise (it should be operationalized, not handled 'by exception')

- Queryable infrastructure, APIs everywhere: that's the only way ops can keep on top of the burden of management

- The NSA is successful at infiltration since they know the environment better than those that created it

- A lot of organizations say they'll fix vulnerabilities that meet a certain risk/severity score. This presentation argues that we should focus patching efforts on critical assets first. Not a new idea, but a good one to help prioritize patching effort

How do you justify shutting down a factory for a security vulnerability? What data would you use to make your case? Make sure 'the security guys' aren't stopping the business for no good reason. It's Security's job to have good data and prioritize risk. If the risks aren't worth the shutdown, InfoSec has lost credibility

- Focus on frequency of exploitation

- Look at the cost an attacker incurs to perform an attack
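The prioritization ideas from this session (patch critical assets first, and weigh how often a vulnerability is actually exploited rather than sorting on severity alone) can be sketched as a sort key. This is my own illustration, not the presenter's method: the `Vuln` fields, the sample backlog and the tie-breaking order are all assumptions, and attacker cost isn't modeled.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    # Hypothetical fields; a real program would pull these from a scanner,
    # an asset inventory and an exploitation feed.
    vuln_id: str
    asset: str
    asset_is_critical: bool
    exploit_events: int   # how often it has been seen exploited (frequency)
    cvss: float           # severity score, used only as a tie-breaker


def patch_order(vulns):
    """Critical assets first, then most frequently exploited, then highest CVSS."""
    return sorted(
        vulns,
        key=lambda v: (not v.asset_is_critical, -v.exploit_events, -v.cvss),
    )


if __name__ == "__main__":
    backlog = [
        Vuln("vuln-A", "test-box", False, 0, 9.8),
        Vuln("vuln-B", "payroll-db", True, 0, 6.5),
        Vuln("vuln-C", "payroll-db", True, 120, 7.5),
        Vuln("vuln-D", "web-frontend", False, 300, 5.0),
    ]
    for v in patch_order(backlog):
        print(v.vuln_id, v.asset)
```

In this made-up backlog, the CVSS 9.8 finding lands last because it sits on a non-critical box and has never been seen exploited.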

Be metric focused, but make sure those metrics mean something. An example from the presentation: the national debt is a big number, but ultimately meaningless. Put a number on your family's share of the debt and it becomes much more meaningful

Protecting containers from host-level attacks (panel):

- Intel SGX can help to protect containers from a compromised host, sort of

- It can't prevent or stop exploitation of hardware-based issues (like Spectre/Meltdown)

- 'Remote attestation' supposedly uses a certificate to prove to processes/services 'off box' that the server they are connecting to is protected by SGX. No details about this were presented

- My question: Can malware use this to 'go dark' on my own machine and make memory forensics a challenge?

Day 3

8:00 - [AIR-W02] Predicting Exploitability -- Forecasts for Vulnerability Management

Presenter: Michael Roytman - Chief Data Scientist @ Kenna Security

Class Blurb from the pamphlet:

"Security is overdue for actionable forecasts. With some open source data and a clever machine learning model, Kenna can predict which vulnerabilities attackers are likely to write exploits for."

I arrived late at this session after ditching another session that was pretty 'meh'. What I saw here was pretty cool, though I need to bone up on machine learning!

Data sources used by his ML model:

- NVD

- Common Platform Enumeration

- ExploitDB

- Metasploit

- Elliot / Immunity's CANVAS

Interesting notes:

- 77% of vulnerabilities have no known exploit

- If your ML model isn't right at least 77% of the time, it's worse than just 'guessing' that nothing will be exploited (a guess that is right 77% of the time)

- Try using Amazon ML, which gives you good results for cheap

- The ML model used here predicts IF something is likely to be exploited, as opposed to trying to guess 'when'

- Patching by vendors has an impact on whether or not an exploit is developed, but not necessarily as much as you might think (since many environments run unpatched)

- ML models can be very specific, with few false positives but not returning everything that could be of interest (lower recall), or they can be broad, returning lots of records at a correspondingly higher false-positive rate
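To make the 77% baseline and the specific-vs-broad trade-off concrete, here is a small Python sketch of my own (not Kenna's model or data). It scores three hypothetical predictors against 100 made-up vulnerabilities, 23 of which get exploited, matching the 77% 'no known exploit' figure from the talk.

```python
def confusion(actual, predicted):
    """Count true/false positives/negatives (True = 'gets exploited')."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)
    fp = sum(1 for a, p in pairs if not a and p)
    fn = sum(1 for a, p in pairs if a and not p)
    tn = sum(1 for a, p in pairs if not a and not p)
    return tp, fp, fn, tn


def scores(actual, predicted):
    """Return (accuracy, precision, recall)."""
    tp, fp, fn, tn = confusion(actual, predicted)
    accuracy = (tp + tn) / len(actual)
    precision = tp / (tp + fp) if tp + fp else 0.0  # flagged vulns that really got exploited
    recall = tp / (tp + fn) if tp + fn else 0.0     # exploited vulns that got flagged
    return accuracy, precision, recall


# 100 hypothetical vulnerabilities: the first 23 get exploited, 77 never do.
ACTUAL = [True] * 23 + [False] * 77

MODELS = {
    # Always say "no exploit": right 77% of the time, catches nothing.
    "baseline": [False] * 100,
    # Narrow/specific: flags 10, 9 of them correctly.
    "specific": [True] * 9 + [False] * 14 + [True] * 1 + [False] * 76,
    # Broad: flags 40, catching 20 of the 23 exploited ones.
    "broad": [True] * 20 + [False] * 3 + [True] * 20 + [False] * 57,
}

if __name__ == "__main__":
    for name, predicted in MODELS.items():
        acc, prec, rec = scores(ACTUAL, predicted)
        print(f"{name:8} accuracy={acc:.2f} precision={prec:.2f} recall={rec:.2f}")
```

With these illustrative numbers, the broad model only ties the do-nothing baseline on accuracy while catching most of the exploited vulnerabilities, which is why accuracy alone is a poor yardstick when most vulnerabilities are never exploited.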

A possible analogy: Think of patches as vaccines. Do you get every vaccine? Vaccines can be time-limited or have side effects that may not be desirable

Another thought: with the data used by this model, could attackers use the predictions to focus on vendors and products that are more likely to be exploitable?

9:00 - [LAB4-W03] Foundations for a Strong Threat Intelligence Program

Presenters:

- Jeff Bardin - Chief Intel Officer @ Treadstone71

- Anthony Nash - Manager @ Symantec Intelligence Services

Class Blurb from the pamphlet:

"The session will cover the key ingredients proven to efficiently create a cyberthreat intel program, and will also cover the methods to run a guided assessment establishing your program in minimal time"

I had to leave about a half-hour after this started to catch a conference call for work. Here are my notes on what I found useful:

- Threat Intelligence is about 'staying left of the boom' (know in advance, prevent a disaster)

- Data, Information and Intelligence are different things

- Data: a raw data point. No weight or score assigned (like 'blue car', 'IPv4 8.8.8.8', 'TCP Port 4444')

- Information: a data point with context and analysis (like 'Metasploit activity detected on port 4444 originating from IPv4 8.8.8.8')

- Intelligence: This is the product of the Threat Intel process. Information correlated, analyzed and synthesized into something actionable (Who did what, where and how? Or what will they do?)

Key point: This is all DATA DRIVEN. There is no room for hunches. Threat Intel is about Rigor, Tradecraft and Data. We need evidence-based knowledge

As a company, if you are serious about threat intel you should:

- Make sure your intelligence people are removed from / not a part of computer incident response. Intel guys won't be happy in a CSIRT environment, since it is so far removed from their goals, objectives and talents.

- CSIRT: Hamster wheel of exhaustion

- Threat Intel: Feeds risk assessment & remediation/treatment approaches to decision makers

- Have enough money. Intelligence is expensive (specific number mentioned: more than $200k/yr)

- Employ 3 to 5 outside threat intelligence vendors, since inevitably no single vendor will give you everything you need.

- Why is attribution important?

- It's more than just having someone we can point our finger at

- It's about understanding motivations and predicting what else they may try to do to your business

Considerations in Threat Intel:

Environment --> Goals --> Capabilities/Limitations --> Prioritize Sources --> Consumers

- Environment

- Where do we operate?

- What is our scope?

- Where are / what are our important assets?

- Goals

- What do we want out of our threat intel program?

- Why do we want attribution?

- Set goals appropriately so you don't chase "shiny" things that have no real risk impact on your business

- Capabilities/limitations

- What is our budget?

- What is our authorization? Can we get all the data we need to know enough about where our risks are to prioritize our limited resources?

- What is our authority? Do we have to step aside even if we know a threat is imminent?

- Who can we involve, who can't we involve?

- What are the sacred cows?

- Prioritize Sources

- Once you have threat intelligence data coming in, you'll need to gauge reliability and applicability. Not all sources are equal in quality

- What are the IOCs? (no hunches, just data)

- How do we vet new sources? How do we do this on an on-going basis?

- How BIASED are our sources? [Another good reason to have multiple threat intel sources]

- How does OSINT come into play?

Types of Threat Intel:

Strategic - How does this impact our Business?

Operational - How does this affect our SOC/NOC?

Tactical - For analysts dealing with day-in, day-out IOCs

Technical - Security/product engineering, nuts-and-bolts focus

Day 4

9:00 - [LAB2-R03] Learning Lab: Web Application Testing -- Approach and Cheating to Win

Presenters:

- Jim McMurry - CEO @ Milton Security Group

- Lee Neely - Sr Cyber Analyst @ Lawrence Livermore National Lab

Description from the pamphlet:

"Applications built from a collection of assembled code segments need to be assessed quickly and easily. This talk will break down the pen-testing process to a manageable model anyone can use to cheat to win."

From what I saw of the class, it was well laid out and guided participants through a series of exercises designed to help them better understand the approaches taken and gain familiarity with the tools. I gained the most from learning more about the OWASP ZAP tool (which I've been using for years); I'm astounded by how much power is in the tool.

The class ran into a fair number of technical difficulties. There were issues with the ESX server used to host the 'victim' machines, so the instructors switched to 'plan B': running the vulnerable web application (Mutillidae) locally on the Chromebooks they procured for the class. I appreciate that the instructors had a fallback option. (Apparently, this is usually a 5-day course that they condensed down to 2 hours!)

The instructors are pushing this methodology:

Recon --> Mapping --> Discovery --> Exploitation

(What they'd _really_ like to see is for us to spend no more than a couple of minutes on each step and use this as a continuous cycle that feeds back into itself to generate more results)

Exercises and notes:

- Lab 1: Password Fuzzing with ZAP

- Use the CeWL tool to gather a list of words from the Mutillidae vulnerable web page

(cewl -a -w /path/to/generated_words.file http://ip/page)

- Use ZAP to fuzz-test the login page with passwords from the word list until it lets you in:

- Use ZAP to browse the site

- Find the request in the tree view where the username and password are submitted

- Right-click on that request, and select tools -> fuzzing

- Configure the USERNAME parameter to be a static 'string'

- Configure the PASSWORD parameter to use the words in the word list file

Screenshots, since it's hard to type out and show this tool very well (ZAP is powerful, but the UX is just not there...):

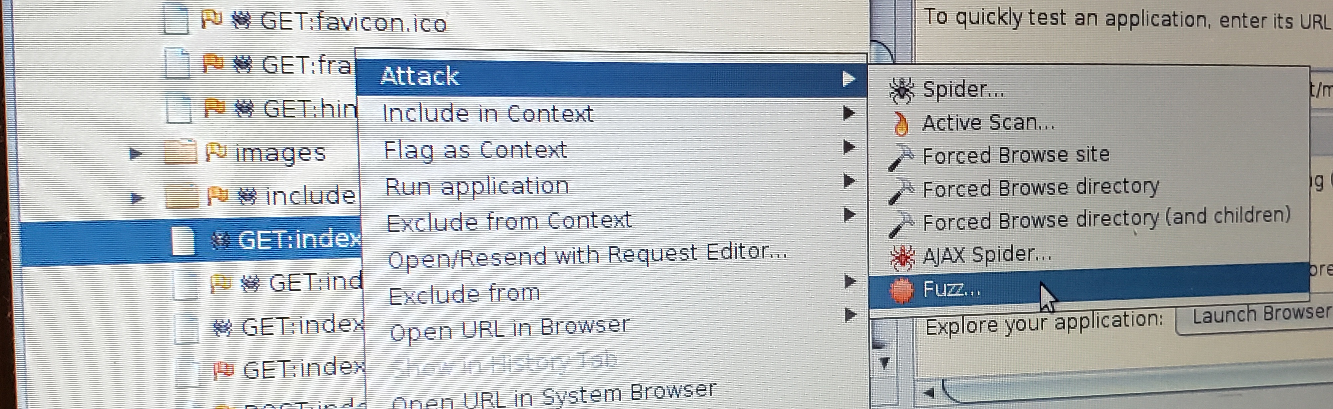

(This shows how to get to the Fuzz... menu)

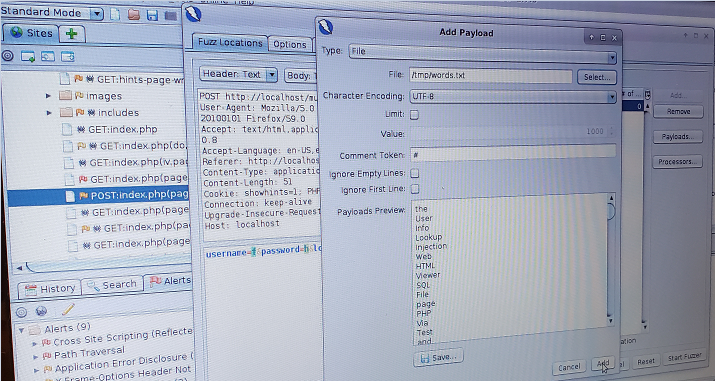

(This shows that once you are at the 'fuzz' option, you need to select just the VALUE of the password parameter, then click 'add', then change the 'type' to File so you can select the word list to be used in fuzzing the password)

NOT pictured (but still important): Watch the response size as ZAP fuzzes for credentials. When you find a size that is different from the rest, you've most likely found the password for the user!!!
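ZAP does the real work in this lab, but the 'watch the response size' trick is easy to model. Below is a minimal Python sketch (my own illustration, not how ZAP works internally): `fake_login`, its HTML, the `admin`/`letmein` credentials and the word list are hypothetical stand-ins for the Mutillidae login page and a CeWL-generated list.

```python
from collections import Counter


def fake_login(username, password):
    """Hypothetical stand-in for the login page: the success page is a
    different size from the failure page."""
    if username == "admin" and password == "letmein":
        return "<html><p>Welcome back, admin!</p><a href='logout'>Log out</a></html>"
    return "<html><p>Login failed</p></html>"


def fuzz_passwords(login, username, wordlist):
    """Try each word as the password and record (word, response size)."""
    return [(word, len(login(username, word))) for word in wordlist]


def size_outliers(results):
    """Words whose response size differs from the most common size."""
    common_size, _ = Counter(size for _, size in results).most_common(1)[0]
    return [word for word, size in results if size != common_size]


if __name__ == "__main__":
    words = ["mutillidae", "owasp", "password", "letmein", "security"]
    results = fuzz_passwords(fake_login, "admin", words)
    print(size_outliers(results))
```

The heuristic assumes failed logins all return a page of the same size; if the page echoes the submitted value or embeds a token, sizes will drift a little, so in practice you look for a size that stands well apart from the rest.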

- Lab 2: SQL Injection with SqlMap

- Mutillidae is a vulnerable web app, so you can do things like type this in the username field to bypass auth: admin' -- '

- SqlMap makes this quick, once you know what the login page is.

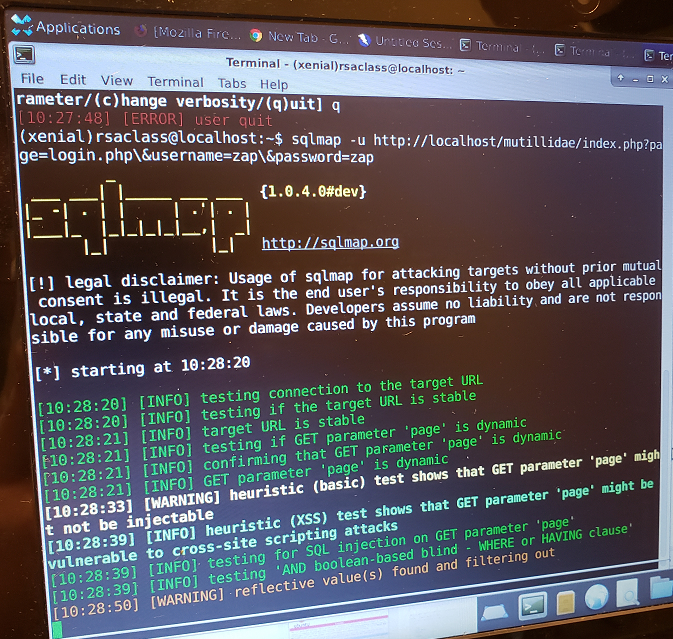

- This picture shows how to run SqlMap and define the web application's input parameters so SqlMap knows where to fuzz:

(Important Note: Be sure to escape the ampersands. An unescaped & is the shell's background operator, so the shell would end the sqlmap command at the first & and SqlMap would never see the username and password parameters it needs to fuzz!)

Sample command line (taken from the image for reference):

sqlmap -u http://localhost/mutillidae/index.php?page=login.php\&username=zap\&password=zap

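** I wondered whether I'd need to escape the question mark to fuzz the first parameter. As far as I can tell, no: SqlMap tests every GET parameter it finds (including page) by default, and to the shell ? is only a glob character, which bash passes through unchanged when nothing matches. Wrapping the whole URL in single quotes sidesteps all of the escaping questions anyway.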
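A quick way to see why the ampersands matter: this Python sketch (an illustration only; it simulates the shell's behavior instead of invoking one) lists the GET parameters SqlMap has to work with when the sample URL arrives intact, versus when an unescaped shell cuts it off at the first &.

```python
from urllib.parse import parse_qs, urlsplit

URL = "http://localhost/mutillidae/index.php?page=login.php&username=zap&password=zap"


def get_params(url):
    """GET parameter names in the order they appear in the query string."""
    return list(parse_qs(urlsplit(url).query))


def after_unescaped_shell(url):
    """Roughly what sqlmap receives if the URL is typed unescaped and unquoted:
    the shell ends the command at the first '&' and backgrounds it."""
    return url.split("&", 1)[0]


if __name__ == "__main__":
    print(get_params(URL))                         # ['page', 'username', 'password']
    print(get_params(after_unescaped_shell(URL)))  # ['page']
```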

Note on SqlMap: When it finishes, you will find the results in the ~/.sqlmap directory of the user who ran the tool!
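To see why typing `admin' -- '` into the username field gets you in, here is a minimal sketch using Python's built-in sqlite3 module. The table, columns and password are hypothetical, and SQLite is not the database Mutillidae runs on, but the comment trick works the same way; the parameterized version shows the usual fix.

```python
import sqlite3


def make_db():
    """In-memory database with one hypothetical account."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE accounts (username TEXT, password TEXT)")
    db.execute("INSERT INTO accounts VALUES ('admin', 'S3cret!')")
    return db


def vulnerable_login(db, username, password):
    # BAD: user input is pasted straight into the SQL text, so a quote in the
    # username ends the string literal and "--" comments out the password check.
    query = (
        "SELECT username FROM accounts WHERE username = '" + username
        + "' AND password = '" + password + "'"
    )
    return db.execute(query).fetchone()


def safe_login(db, username, password):
    # GOOD: placeholders make the driver treat input strictly as data.
    query = "SELECT username FROM accounts WHERE username = ? AND password = ?"
    return db.execute(query, (username, password)).fetchone()


if __name__ == "__main__":
    db = make_db()
    payload = "admin' -- '"
    print(vulnerable_login(db, payload, "wrong"))  # ('admin',): auth bypassed
    print(safe_login(db, payload, "wrong"))        # None
```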

- Lab 3/4: Passive & Active Recon

Tools:

- Autosploit (github.com/nullarray/autosploit)

- This is the 'cheating' part of the presentation. They were excited to share this tool which automatically looks for exploits and tries to PWN whatever hosts you point it at. Sounds like it's pretty effective(?)

- You can give your 'workspace' a name via the CLI

- Since it's based on Metasploit, it will want an LHOST and LPORT for compromised systems to call home to.

- Whois

- DNS

- Shodan

- Google dorking

- Pastebin

- haveibeenpwned

- recon-ng - This is supposed to 'keep a notebook' for you(?)

12:30 - [LAB2-R10] Learning Lab: Practical Malware Analysis CTF

Presenters:

- Sam Bowne - Instructor @ CCSF [City College of San Francisco]

Instructor Site: https://samsclass.info/126/126_RSA_2018.shtml

- Dylan Smith - Student @ CCSF [City College of San Francisco]

This hands-on lab was based on the book of the same name. Sam teaches the malware analysis course at the local city college. There was a CTF aspect to the course: you earn points for each question you answer correctly, and there's a scoreboard.

The instructor's goal is to show that you don't NEED tools like IDA Pro or other disassemblers to figure out how most malware works. You can use tools like ProcMon, Wireshark, Process Explorer and a few others to get a handle on what is happening on your system.

Highlights from the lab:

- 90% of malware analysis is understanding Windows software development and how developers misuse undocumented Windows kernel features.

- You can't trust anything 100% (not your tools, not the OS). You should have a lot of tools at your disposal so if malware evades one, it can (hopefully) be detected by another

Analysis process notes:

- Use BinText to find interesting strings in executables

- Use Dependency Walker to find which API calls your exe uses. BinText / PEView can show some of this, too.

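- Windows API conventions:

- Function name ends in 'A': it uses ANSI (narrow, code-page) strings

- Function name ends in 'W': it uses wide-character (UTF-16) strings

- Function name ends in 'Ex': it is an 'extended' version of an older function, usually with extra parameters or options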

- Look for mutexes and other things that help malware avoid re-infecting a host

- Unpack exes if you can to learn more about the executable code

- Static and dynamic analysis processes can be used

- Look for 'orphan' processes, or processes that have had their insides replaced with malicious code (strings in memory vs on-disk)

- Run executables inside a debugger so you can poke/prod and manipulate the malware into giving up its secrets. OllyDbg was used in the course
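BinText and the A/W naming convention fit together: 'A' APIs work with narrow ANSI strings, while 'W' APIs use UTF-16, which in a binary usually shows up as each ASCII character followed by a zero byte, so a strings tool has to look for both. The Python sketch below approximates that search and adds a suffix check for import names. It's my own rough illustration of the general technique, not BinText's or Dependency Walker's actual logic, and the sample bytes are made up.

```python
import re


def ascii_strings(data: bytes, min_len: int = 4):
    """Runs of printable ASCII, roughly what a strings tool's ANSI view shows."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [m.group().decode("ascii") for m in re.finditer(pattern, data)]


def wide_strings(data: bytes, min_len: int = 4):
    """Runs of printable characters stored as UTF-16LE (each byte followed by
    0x00), which is how strings used with 'W' APIs usually sit in a binary."""
    pattern = rb"(?:[\x20-\x7e]\x00){%d,}" % min_len
    return [m.group().decode("utf-16-le") for m in re.finditer(pattern, data)]


def api_flavor(name: str):
    """Heuristic read of a Windows API name's suffix: (charset, is_extended).
    A name that merely happens to end in a capital A or W would be misread."""
    charset = None
    if name.endswith("A"):
        charset, name = "ANSI", name[:-1]
    elif name.endswith("W"):
        charset, name = "wide (UTF-16)", name[:-1]
    return charset, name.endswith("Ex")


if __name__ == "__main__":
    # Made-up bytes: one ASCII string and one UTF-16LE string.
    sample = (b"MZ\x90\x00\x03\x00hello.dll\x00\x00"
              + "C:\\evil\\payload.exe".encode("utf-16-le") + b"\x00\x00")
    print(ascii_strings(sample))   # ['hello.dll']
    print(wide_strings(sample))    # ['C:\\evil\\payload.exe']
    for api in ("CreateFileA", "RegOpenKeyExW", "VirtualAllocEx"):
        print(api, api_flavor(api))
```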

- Kernel debugging with WinDbg

You need a kernel debugger when you want to do driver work or see how the Windows kernel is affected by software/malware

- Within WinDbg:

- !process - shows information about the current process

- !process 0 0 - brief list of every process (CLI version of Task Manager)

- .help process - shows you help for commands

- lm - list loaded modules (nt is the kernel; it's always there)

- dd nt / da nt / db nt - dump kernel memory at the start of the nt module as DWORDs, ASCII and bytes, respectively

- x nt!* - lists all symbols (including functions) in ntoskrnl

- x nt!*Create* - lists all symbols whose names contain Create

- dt nt!_DRIVER_OBJECT - shows the layout of the driver object structure so you can see what's inside

- SSDT Hooking (System Service Descriptor Table; the closest Linux analog is the kernel's system call table, sys_call_table, not the user-mode Global Offset Table)

- Overwriting an SSDT entry redirects a system call to your own code, so you can change what the OS does for every process without patching any executable

- Malware can use this to intercept system calls for things like keyloggers

- 75% of BSODs are caused by drivers using improper or buggy hooking

(Supposedly Microsoft has a new interception approach they recommend, but we didn't get into it in the class)
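The core trick behind SSDT hooking (overwrite one entry in a dispatch table, then forward to the saved original so nothing looks broken) can be modeled in a few lines. This Python sketch is a user-space analogy I wrote to illustrate the concept, not kernel code or anything shown in the lab; the table, service numbers and handler names are hypothetical.

```python
# A user-space toy model of SSDT hooking. The "services", their numbers and
# the table are hypothetical; real SSDT hooks patch kernel memory.


def svc_read_file(path):
    return f"contents of {path}"


def svc_write_file(path, data):
    return len(data)  # pretend we wrote len(data) bytes


SERVICE_TABLE = {0: svc_read_file, 1: svc_write_file}


def syscall(table, number, *args):
    """Every caller dispatches through the table, like user-mode code
    reaching kernel services through the SSDT."""
    return table[number](*args)


def install_hook(table, number, log):
    """Swap one table entry for a wrapper that records the arguments and then
    calls the saved original, so callers see normal results."""
    original = table[number]

    def hooked(*args):
        log.append((original.__name__, args))
        return original(*args)

    table[number] = hooked
    return original  # keep this so the hook can be removed later


if __name__ == "__main__":
    captured = []
    original = install_hook(SERVICE_TABLE, 1, captured)
    print(syscall(SERVICE_TABLE, 1, "notes.txt", "password=hunter2"))  # 16
    print(captured)  # [('svc_write_file', ('notes.txt', 'password=hunter2'))]
    SERVICE_TABLE[1] = original  # unhook
```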

Day 5

9:00 - [GRC-F01] Security Automation Simplified via NIST OSCAL: We're Not in Kansas Anymore

Presenters:

- Anil Karmel - CEO [C2 Labs]

- David Waltermire - Security Automation Architect [NIST]

This session introduced me to OSCAL [Open Security Controls Assessment Language], which, when completed, aims to bring order to the chaos that is security assessment across various governance and control standards, which can at times be contradictory or mutually exclusive.

The standard should be out at 1.0 in 2019. This aims to be a 'standard of standards' (insert relevant XKCD here!) to help organizations build profiles where multiple standards apply (think: NIST 800-53, COBIT, ISO 27002, PCI-DSS, etc...)

I think this could be pretty interesting, once it hits a stable release. For now, this is the future and not the present.

10:15 - [HUM-F02] How the Best Hackers Learn Their Craft

Presenter:

- David Brumley - CEO [ForAllSecure] {Also an instructor at Carnegie Mellon University (CMU)}

This session was well crafted and, I think, helps to show how anyone can achieve the Hacker Perspective. David has been building top hacking teams at the University level for the last 7 years or so and has a wealth of experience in setting up a pipeline for recruiting and training top hackers. His teams have gone on to win DEF CON competitions and other competitive challenges around the world.

The goal of this presentation is to impart lessons learned from his years of experience developing Hacker talent so we can learn from the most effective strategies he can offer.

Key phrase that I'd love my 4-year-old to identify with: "I don't know everything... but I can figure it out!"

Highlights/Key notes:

- Most of the time, brilliant hackers don't just "emerge"; they have trained over time from low (or no) levels of competence to expert levels

- David has had to build a pipeline to develop talent, since most of his students at CMU graduate after 4 years. The hacking team needs fresh blood to replenish itself over time.

- This includes liberal use of CTFs to encourage high school students into the field

- Some of his top-competitors had never had an interest in security or hacking before being exposed to challenges in High School, which just goes to confirm that you don't need to be 'born that way' to pick it up.

- CTF (Capture the Flag) events provide the greatest value in helping people develop an interest and progress through the ranks of hacker expertise

- Beginner to intermediate hackers gain the most from thoughtful 'Jeopardy'-style CTFs that give them open-ended freedom to explore, understand and solve technical challenges

- Advanced to Expert hackers gain the most from 'attack/defend'-style CTFs that pit them against each other and let them learn in a no-holds-barred, survival of the fittest type match.

- Beginners to intermediates are NOT well served by attack/defend-style CTFs, since the goal is to prey upon weaknesses and exploit them as hard and as often as you can. When the teams' skill sets are greatly mismatched, it leads to heavy discouragement on the part of the weaker team/players.

The CTFs should:

- Provide applied, deliberate practice. CTF problems or challenges should build on each other so that, when completed in their entirety, they give a participant a deeper and broader understanding of how the different pieces fit together

- Promote autodidactic learning. The best thing someone can learn is that nobody has all the answers, and that they should not be expected to HAVE all of the answers either. This is learning how to learn and gaining confidence in the learning approach(es) that lead you to solutions

- The industry moves too fast to be reliant on authority figures or 'experts' anyway...

- Encourage creative problem solving. While CTF questions should be tested for sanity and feasibility, the only requirement should be for a student to provide the correct RESULT. They should not be bogged down in having to provide a comprehensive 'solution' proving how they solved the problem, since their own exploration and journey will lead them down a path that is more valuable than a lab report.

- There should be few constraints on a solution

- Check only the result

Specific CTF resources mentioned for those who want to use them or model programs off of them:

- picoctf

- microcorruption

- hackcenter

- hack.im 2012

"Complete" pipeline I gathered from this presentation:

- Attract new candidates by making engaging, interesting CTFs (his target is high school students)

- Provide them with mentorship that consists of 'guard rails' and resources to promote creative and autodidactic problem solving (applied, deliberate practice)

- Funnel candidates down progressively more difficult challenges

- Have them mentor others.

- Have them write the next generation of CTF to continue the cycle

11:30 - [CXO-F03] Business Executive Fundamentals: How to Beat the MBAs at Their Own Game

Presenters:

- Jung Lee - Head of Certification Programs [CyberVista]

- Amjed Saffarini - CEO [CyberVista]

This session featured a securities analyst (Think SEC instead of InfoSec) and a CEO talking about how Executives and the Board of Directors think. The goal is to help security practitioners understand where the business is coming from so we can better align our message with company goals.

Key takeaways:

- Companies care about risk and managing risk. As more risk is tied up in information, information systems and IT, there is more overlap between technology and the core business.

- Something these guys have noticed over time is that conversations between executives and security are growing more frequent

- InfoSec guys don't normally have the foundations of an MBA to speak the execs' language, and have struggled to communicate risk, strategy and value

- They give presentations like this one at security conferences to help bridge the gap and inspire conversation

- The purpose of company executives (and the Board) is to protect, preserve and grow the business. They are accountable to shareholders (like your 401k plan) who want to preserve the business's value (i.e. keep paying out dividends)

- If a company earns the market's trust, it can play a 'long game' and not have as much pressure to deliver on a quarterly basis

- Most companies don't have that kind of market trust, so they are on the hook to deliver value on a quarterly basis

- Large shareholders have a lot of sway with companies (think 401k plans or investment groups like Vanguard). If a large investor decides to exit their position in a company, it causes a dip in the company's stock price (even if the sale is done as a "block trade" off the exchange)

- Analysts have an outsized impact on the price of a company's stock. It's their job to get as transparent a look inside a company as they can to understand its fundamentals, how it works and what the leadership structure looks like/how they operate. Will the company be around next year? Will the company grow?

- Sometimes analysts can be a bit shady and try to time news to coincide with their own buying/selling activity

- Often these guys are young, but they are in positions to grill the highest-level executives

- Company P/E ratios have everything to do with confidence in the business and its growth ability. Why pay 7x or 250x what a company makes each year unless you are confident they are going to grow at an acceptable rate?

- The example given by Jung Lee (Securities Analyst) really helped put this in perspective for me:

Say you are in the market to purchase a rental property that generates $1,000 per month in revenue. How much would you be willing to pay for this property? Annually, you will collect $12,000 in gross rent from your tenants. Would you pay 7x that? 30x?

The more you are willing to pay for the rental property (In multiples of gross annual earnings), the more confident you are that the property will increase in value over time.

So, my takeaway is that if a company appears likely to grow over time, owning a stake in it becomes more valuable, because the piece of the company you own now should be worth more later.
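The rental example is just multiplication, but writing it out makes the 'multiple of earnings' idea concrete. A minimal Python sketch using the numbers from the talk (gross rent only; expenses, financing and taxes are ignored for simplicity):

```python
def price_at_multiple(annual_earnings, multiple):
    """Price paid when buying at `multiple` times annual earnings."""
    return annual_earnings * multiple


def earnings_multiple(price, annual_earnings):
    """The multiple a price implies (the same shape as a P/E ratio)."""
    return price / annual_earnings


annual_rent = 1_000 * 12  # $1,000/month in gross rent -> $12,000/year

if __name__ == "__main__":
    for multiple in (7, 30):
        price = price_at_multiple(annual_rent, multiple)
        print(f"{multiple}x -> ${price:,}")
    # 7x -> $84,000
    # 30x -> $360,000
```

One way to read the multiple: it's roughly how many years of today's gross rent you are paying for up front (7 years at 7x, 30 at 30x). Paying the higher multiple only makes sense if you expect the income, or the property's value, to grow, which is the same bet investors make when they pay a high P/E.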

Other notes:

- Read and understand as much as you can about how your business is structured, its goals, values, mission and customers (10-K filings, investor letters, etc.).

- Information Security should be laser focused on helping the business accomplish its objectives

- Read and understand as much as you can about the competition/other players in your space, too!

- Don't just focus on 'decreasing costs' of Information Security. That doesn't do anything to help InfoSec get out of the 'cost center' box.

- To demonstrate real business value, InfoSec should try to get included in COGS (Cost of Goods Sold). COGS measures the direct costs the company incurs to produce and deliver whatever it sells.

- If InfoSec makes it into the COGS category, it means that it's seen as vital or critical to delivery of the organization's products or services

- The question to ask yourself is: How does Security help sell the product? Does it contribute at all?

- 'Fungibility' of money: your shareholders can sell their position and buy into a competitor without much you can do about it. Value-conscious investors rebalance their portfolios to ensure they get the best value they can

- Publicly traded companies (and companies in general) can be either top-line focused or bottom-line focused, depending on the type of business they are

- The P/E multiple that people are willing to pay to get into the company helps you compare businesses in the same sector, with the idea that a higher P/E ratio means people are more confident in the growth of the business

- Companies care a LOT about what others think (especially institutional investors and analysts). They also care about how their industry and their competition look, as this can weigh on how the company is valued.

- This gives you an argument for InfoSec if your competitors are also investing in it and/or touting it as part of their offerings

- Do analysts or institutional investors care about InfoSec? If not, making the case will be hard

- What competitors do impacts YOUR company's stock price. Did something bad happen to a competitor? When will it happen to you? How do you inspire confidence in shareholders, prospects and analysts that you are more secure or better?

- Perception is a critical factor: you could BE the most awesome, but how do you advertise this believably?

- Likewise, if a company is not the most awesome but comes across like it is, people will be swayed.