Ever Stale: A Tale of missed updates or how to upgrade GitLab from 12 to 14

I spent an hour reviewing my home lab setup and found an old internal GitLab instance that I hadn't touched in some time. It was running GitLab-CE 12.10.13-ce.0 and was ready for an update! The latest version of GitLab at the time of this writing is 14.5.2-ce.0, which was too far to take in one jump. A complicating factor is that I've run this server since version 8.x using the publicly available Docker image and have not built it out in a way that lends itself to easy upgrades. Fortunately I was able to apply the updates, though not without some drama.

Today's ‘modern’ approach to application deployment can leave you in a perpetually stale state if you aren't able or willing to keep up with the relentless onslaught of patches, updates and troubleshooting patch/update failures.

References

- Upgrading GitLab [docs.gitlab.com]

- Upgrading to later 14.Y releases [docs.gitlab.com]

- Batched background migrations [docs.gitlab.com]

- No such file or directory @ realpath_rec - /opt/gitlab/sv/redis/log/config during upgrade of docker images [forum.gitlab.com]

- How to run psql in Gitlab Docker image [techoverflow.net]

- permission denied to create extension “btree_gist” [github.com/sameersbn]

- Gitlab upgrade issue today: reconfigure - ERROR: permission denied to create extension “btree_gist” [gitlab.com/gitlab-org]

- Gitlab CE Doesn't Add a Public Key to authorized_keys [stackoverflow.com]

- Push to gitlab.com registry gives ‘access denied’ [gitlab.com/gitlab-org]

Summary

Read the documentation before attempting to upgrade. To be safe you need to take one or more point releases from every major version between where you are and where you want to go. If you run a dockerized instance with bind-mounted volumes you may have to scoot some files around manually where the upgrade process leaves you hanging. Watch out for missing files/binaries and postgres extensions!

Steps to Freshen Up Your Installation

To go from 12.10.x to 14.5.2:

- If you run GitLab over HTTPS (which is strongly recommended!), ensure that your certificate is up to date

  - An invalid certificate can result in a hung page with no information or context on what is wrong

  - This is worth checking if you are stuck with a page that doesn't load
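A quick way to rule out an expired certificate is to ask openssl directly. This is a minimal sketch that assumes the certificate is available as a PEM file; the function name `cert_valid_for` and the 7-day default window are illustrative choices of mine, not anything GitLab prescribes.

```shell
# Succeeds if the PEM certificate in $1 will still be valid $2 seconds from
# now (default: 604800 seconds = 7 days). Uses `openssl x509 -checkend`,
# which exits non-zero when the certificate expires within that window.
cert_valid_for() {
  openssl x509 -in "$1" -noout -checkend "${2:-604800}" >/dev/null
}
```

To look at the certificate a running server actually presents, something like `openssl s_client -connect glab.domain.tld:443 -servername glab.domain.tld </dev/null 2>/dev/null | openssl x509 -noout -enddate` should print its expiry date (assuming HTTPS is on port 443).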

- In the event that you are bind-mounting volumes, ensure that you are prepared with configs in the expected place. In my case I found that the /opt/gitlab/sv directory was missing a few configs that I had to bring over manually (replace <container> with the name of your GitLab container):

  - docker exec <container> cp /var/opt/gitlab/redis/redis.conf /opt/gitlab/sv/redis/log/config

  - docker exec <container> cp /var/opt/gitlab/gitaly/config.toml /opt/gitlab/sv/gitaly/log/config

  - docker exec <container> cp /var/opt/gitlab/postgresql/data/postgresql.conf /opt/gitlab/sv/postgresql/log/config
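Since these copies may need repeating after more than one upgrade, they can be wrapped in a small helper. This is only a sketch: the function name `copy_sv_configs` is hypothetical, the container name is passed in as an argument, and the source and destination paths are exactly the three pairs listed above.

```shell
# Copy the three config files listed above into the service log directories.
# $1 is the name of the GitLab container (an assumption: yours may differ).
copy_sv_configs() {
  container="$1"
  while read -r src dest; do
    # </dev/null keeps docker from swallowing the rest of the here-document
    docker exec "$container" cp "$src" "$dest" </dev/null || return 1
  done <<'EOF'
/var/opt/gitlab/redis/redis.conf /opt/gitlab/sv/redis/log/config
/var/opt/gitlab/gitaly/config.toml /opt/gitlab/sv/gitaly/log/config
/var/opt/gitlab/postgresql/data/postgresql.conf /opt/gitlab/sv/postgresql/log/config
EOF
}
```

Calling `copy_sv_configs gitlab` would run the three copies against a container named `gitlab` and stop at the first one that fails.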

- Upgrade to the latest version of the point release you are currently tracking (like 12.10.14-ce.0)

  - Note: For every release you upgrade to, be sure to navigate around and make sure pages are loading as you'd expect. It's easier to fix problems as they arise than to wait for a big ball of problems at the end.

-

Upgrade to 13.0.4

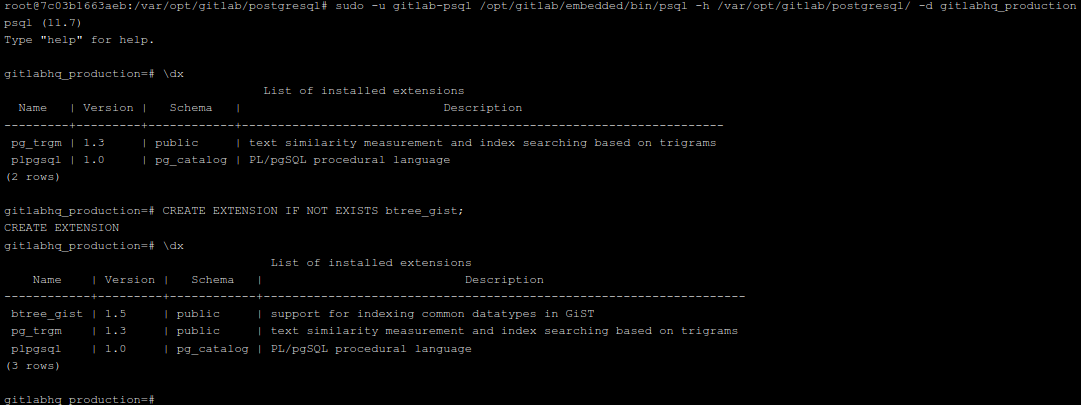

- This may break your postgres due to a lack of btree_gist

- If so, fix it like this:

- Shell into your container

- sudo -u gitlab-psql /opt/gitlab/embedded/bin/psql -h /var/opt/gitlab/postgresql/ -d gitlabhq_production

- \dx to show extensions in postgres

- CREATE EXTENSION IF NOT EXISTS btree_gist;

- \dx to confirm that btree_gist is installed:
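The same fix can be applied without an interactive session by passing the statements to psql with `-c`. The sketch below reuses the exact psql invocation from the steps above; the wrapper name `ensure_btree_gist` and the container-name argument are my own additions, and it assumes sudo is available inside the container as it was for the interactive command.

```shell
# Create the btree_gist extension if it is missing, then list extensions.
# $1 is the name of the GitLab container (an assumption: yours may differ).
ensure_btree_gist() {
  docker exec "$1" sudo -u gitlab-psql /opt/gitlab/embedded/bin/psql \
    -h /var/opt/gitlab/postgresql/ -d gitlabhq_production \
    -c 'CREATE EXTENSION IF NOT EXISTS btree_gist;' \
    -c '\dx'
}
```

Because of the IF NOT EXISTS clause, running it a second time is harmless: PostgreSQL just reports that the extension already exists.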

- Upgrade to 13.4.3-ce.0

- Then upgrade to 13.8.8-ce.0

- Another upgrade to 13.12.10-ce.0

- Yes, another upgrade to 13.12.12-ce.0

- Upgrade to 14.0.11-ce.0

- Upgrade to 14.1.6-ce.0

  - Urgent note: Wait for the background migrations to complete or be willing to suffer the consequences! https://docs.gitlab.com/ee/update/#batched-background-migrations
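One way to avoid moving on too early is to poll the migration queue until it is empty. The sketch below relies on the `gitlab-rails runner` one-liner that, as far as I know, the GitLab upgrade documentation gives for counting remaining background migrations; the function names, the container-name argument, and the 60-second default interval are my own assumptions. It may not cover the newer batched background migrations, which the linked documentation describes how to check.

```shell
# Print the number of background migration jobs still queued.
# $1 is the name of the GitLab container (an assumption: yours may differ).
remaining_migrations() {
  docker exec "$1" gitlab-rails runner -e production \
    'puts Gitlab::BackgroundMigration.remaining'
}

# Block until the count reaches 0, polling every $2 seconds (default 60).
wait_for_migrations() {
  while true; do
    remaining="$(remaining_migrations "$1")" || return 1
    [ "$remaining" = "0" ] && return 0
    echo "Waiting: $remaining background migration job(s) remaining" >&2
    sleep "${2:-60}"
  done
}
```

Running `wait_for_migrations gitlab` before pulling the next image gives a simple gate between upgrade steps.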

- Another upgrade to 14.1.8-ce.0

- Again to 14.2.7-ce.0

- Again to 14.3.6-ce.0

- Again to 14.4.4-ce.0

- Finally to 14.5.2-ce.0

At this point you should be upgraded to the latest and shiniest version of gitlab. Hopefully you won't get so far out of date in the future ;)
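The sequence of stops above can be kept in a script so that you always know which image comes next. This is a sketch under a few assumptions: 13.0.4 is written with a -ce.0 suffix on the guess that its Docker tag follows the same pattern as the others, and `run_gitlab` is a hypothetical function you would define yourself to wrap your own `docker run` command with its ports and bind mounts.

```shell
# Image tags in the order used above. The -ce.0 suffix on 13.0.4 is an
# assumption; the other tags are taken directly from the steps.
UPGRADE_PATH="12.10.14-ce.0 13.0.4-ce.0 13.4.3-ce.0 13.8.8-ce.0 13.12.10-ce.0 13.12.12-ce.0 14.0.11-ce.0 14.1.6-ce.0 14.1.8-ce.0 14.2.7-ce.0 14.3.6-ce.0 14.4.4-ce.0 14.5.2-ce.0"

# Print the stop that follows version $1. Fails if $1 is the final stop
# or does not appear in the path.
next_version() {
  found=0
  for v in $UPGRADE_PATH; do
    if [ "$found" -eq 1 ]; then
      printf '%s\n' "$v"
      return 0
    fi
    [ "$v" = "$1" ] && found=1
  done
  return 1
}

# Replace container $1 with one created from tag $2.
# run_gitlab is hypothetical: define it around your own `docker run` options.
upgrade_one() {
  docker pull "gitlab/gitlab-ce:$2" &&
    docker stop "$1" &&
    docker rm "$1" &&
    run_gitlab "$1" "gitlab/gitlab-ce:$2"
}
```

I would still run one step at a time, click around the UI, and wait for migrations between steps rather than looping over the whole path unattended.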

What's the Damage?

After upgrading I found that git pull/push would not work. There were issues like this:

#

# Prompting for a password instead of using my ssh-key

git clone git@glab.domain.tld:projects/containersploiter.git

Cloning into 'containersploiter'...

The authenticity of host 'glab.domain.tld (192.168.0.20)' can't be established.

ECDSA key fingerprint is SHA256:ATgEKcoTsoJuF/.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'glab.domain.tld' (ECDSA) to the list of known hosts.

git@glab.domain.tld's password:

#

# SSH to gitlab does not show the welcome message

$ ssh git@glab.domain.tld

debug2: resolving "glab.domain.tld" port 26

debug2: ssh_connect_direct

debug1: Connecting to glab.domain.tld [10.2.0.20] port 26.

debug1: Connection established.

debug1: identity file /home/user/.ssh/id_rsa type 0

debug1: identity file /home/user/.ssh/id_rsa-cert type -1

debug1: Local version string SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.3

kex_exchange_identification: Connection closed by remote host

#

# Cloning the project shows a message about access rights that seems off given how I have it configured(?)

$ git clone git@glab.domain.tld:projects/containersploiter.git

Cloning into 'containersploiter'...

debug2: resolving "glab.domain.tld" port 26

debug2: ssh_connect_direct

debug1: Connecting to glab.domain.tld [192.168.0.20] port 26.

debug1: Connection established.

debug1: identity file /home/user/.ssh/id_rsa type 0

debug1: identity file /home/user/.ssh/id_rsa-cert type -1

debug1: Local version string SSH-2.0-OpenSSH_8.2p1 Ubuntu-4ubuntu0.3

kex_exchange_identification: Connection closed by remote host

fatal: Could not read from remote repository.

Please make sure you have the correct access rights

and the repository exists.

The root cause of these issues was that sshd was missing from my upgraded deployment. To address this:

- Pull the latest gitlab-ce Docker image to your computer

- Save it to a tar file: sudo docker save gitlab/gitlab-ce:14.5.2-ce.0 > gitlabce.tar

- Unpack the tar and extract every layer

- Look inside each layer for the sv directory

- Compress the contents of the sshd dir: tar czf sshd.tgz -C /path/to/sv sshd

- Send/upload it to the container, or unpack it in your bind-mount, so that it ends up in the right location: /opt/gitlab/sv

- Restart your container
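Hunting through the layers by hand gets tedious, so the search can be scripted. The sketch below assumes the saved image has already been unpacked into a directory and that each layer sits in its own subdirectory as `layer.tar`, which is how `docker save` laid things out when I did this; newer Docker releases may use a different layout. The function names are hypothetical.

```shell
# Unpack the saved image first (not run here):
#   docker save gitlab/gitlab-ce:14.5.2-ce.0 -o gitlabce.tar
#   mkdir image && tar -xf gitlabce.tar -C image

# Print every layer.tar under directory $1 that contains the sshd service dir.
find_sshd_layers() {
  find "$1" -type f -name 'layer.tar' | while IFS= read -r layer; do
    if tar -tf "$layer" | grep -q 'opt/gitlab/sv/sshd/'; then
      printf '%s\n' "$layer"
    fi
  done
}

# Pack the sshd directory from layer $1 into archive $2, with paths relative
# to sv/ so it can be unpacked directly inside /opt/gitlab/sv.
pack_sshd() {
  workdir="$(mktemp -d)" || return 1
  tar -xf "$1" -C "$workdir" opt/gitlab/sv/sshd &&
    tar -czf "$2" -C "$workdir/opt/gitlab/sv" sshd
  status=$?
  rm -rf "$workdir"
  return "$status"
}
```

With the image unpacked into `image/`, `find_sshd_layers image` lists the candidate layers and `pack_sshd <layer> sshd.tgz` produces the archive to unpack in /opt/gitlab/sv.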

Additionally, I found I had to run this command manually inside the container to ensure my SSH key was included in the authorized_keys file on GitLab:

sudo gitlab-rake gitlab:shell:setup

This allowed my public key to be used for pulling and pushing over SSH.
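To confirm that the rake task really wrote your key, you can compare your public key against a copy of the authorized_keys file. This is a sketch: the function name `key_is_authorized` is hypothetical, and the location of the file inside the container (I believe /var/opt/gitlab/.ssh/authorized_keys on Omnibus installs) is an assumption you should verify.

```shell
# Succeeds if the key material from public key file $1 appears anywhere in
# authorized_keys file $2. Only the base64 blob (second field of the .pub
# file) is compared, because GitLab prefixes each entry with its own options.
key_is_authorized() {
  blob="$(awk '{print $2; exit}' "$1")"
  [ -n "$blob" ] && grep -qF -- "$blob" "$2"
}
```

For example, copy the file out with `docker exec <container> cat /var/opt/gitlab/.ssh/authorized_keys > /tmp/authorized_keys` and then run `key_is_authorized ~/.ssh/id_rsa.pub /tmp/authorized_keys`.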